Can You Have Two Main Cameras On At Same Time Unity

Synchronize multiple cameras to capture at the same time

A delay as low as 100µs can be reached with stock Raspberry Pi boards and no custom hardware.

Example use-cases for synchronized video streams are:

- image stitching for panoramic photo/video, or image blending, for example HDR or low-light imagery

- stereovision for 3D reconstruction and depth sensing applications

- multi-camera tracking, for example eye tracking with one camera dedicated to each eye

Why is synchronization so important?

Let's look at an early stitching test with free-running (unsynchronized) cameras.

Each camera captures at 90fps, so there are about 11ms between consecutive frames in the stream.

In the example above, if the right camera is used as the time reference, the center camera is almost 1ms late and the left camera is more than 5ms late.

During that delay, the car had time to move further from left to right.

As you can see, a single millisecond is already enough for the car to look "chunky" in the stitched image.
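The frame-period and offset arithmetic above can be reproduced in a few lines. This sketch assumes each camera reports a capture timestamp in milliseconds on a common clock; the timestamps below are hypothetical, picked only to match the offsets described (center almost 1ms late, left more than 5ms late).

```python
FPS = 90

def frame_period_ms(fps: float) -> float:
    """Time between two consecutive frames of one camera, in milliseconds."""
    return 1000.0 / fps

def offsets_from_reference(timestamps_ms: dict[str, float], reference: str) -> dict[str, float]:
    """Delay of each camera relative to the reference camera (positive means late)."""
    ref = timestamps_ms[reference]
    return {name: t - ref for name, t in timestamps_ms.items()}

# Hypothetical capture timestamps (ms) of the "same" frame on three free-running cameras.
captures = {"left": 105.4, "center": 100.9, "right": 100.0}

print(f"frame period at {FPS}fps: {frame_period_ms(FPS):.1f}ms")
for name, offset in offsets_from_reference(captures, "right").items():
    print(f"{name}: {offset:+.1f}ms")
```

With an 11ms frame period, free-running cameras can be offset by any fraction of a frame, which is why several milliseconds of skew between cameras is easy to get without synchronization.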

How to synchronize cameras?

Many smartphone SoCs have two or three camera interfaces nowadays and can capture on multiple cameras simultaneously. Specific hardware is also available to merge (aka multiplex) several cameras into a single stream (like the ArduCam "CamArray").

It is also achievable using simple Raspberry Pi and camera hardware, with synchronization over an Ethernet network. This solution is not as precise as direct camera connections, but it avoids custom hardware and has the advantage of scaling to many more cameras (in theory there is no limit).

For example, see a demo of 360° video with 8 Raspberry Pi boards and cameras using network-synchronized camera capture.
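Once the boards share a synchronized clock, one plausible way to line up captures is for every board to trigger frames on the same time grid, so frame k is captured at k/fps seconds on the shared clock. This is an assumption for illustration, not necessarily how the modified raspivid tool does it, and `next_capture_time` is a hypothetical helper.

```python
import math

def next_capture_time(now_s: float, fps: float) -> float:
    """Next frame-grid instant (a multiple of 1/fps on the shared clock) at or after now_s."""
    frame_index = math.ceil(now_s * fps)
    return frame_index / fps

FPS = 30
# Hypothetical shared-clock readings on three boards that start at slightly different moments.
start_times = {"board_a": 12.0011, "board_b": 12.0137, "board_c": 12.0302}

for board, now in start_times.items():
    print(f"{board}: first capture at {next_capture_time(now, FPS):.4f}s")
```

Whatever moment each board starts at, it lands on the same grid instants, so the remaining skew between cameras comes only from the residual clock synchronization error. Computing the frame index as `now_s * fps` rather than dividing by a rounded period avoids a spurious extra frame when `now_s` falls exactly on the grid.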

The last and perhaps main benefit of network synchronization is the possibility of much larger distances between the cameras, for instance in a car where cameras can be located in the left and right rear-view mirrors.

Building a test setup

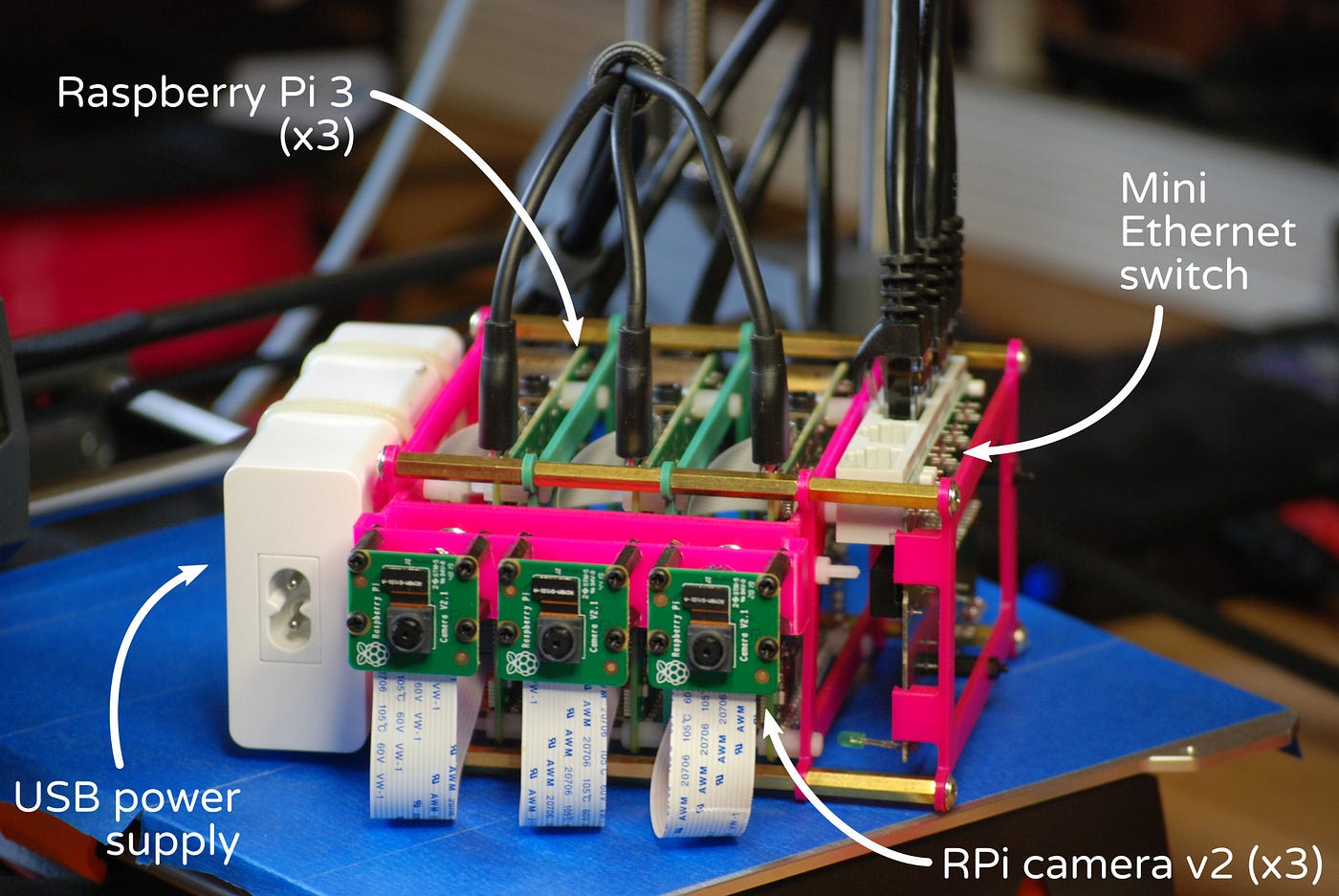

Three cameras are controlled by three independent Raspberry Pi 3 boards, connected together over Ethernet via a mini switch.

The network is used to exchange clock synchronization messages.
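Assuming these messages follow PTP (Precision Time Protocol, IEEE 1588), which this post names later, each board can estimate its clock offset from a master clock with the standard two-way timestamp exchange. The class and function names are hypothetical, and the formula assumes the network path has the same delay in both directions.

```python
from dataclasses import dataclass

@dataclass
class PtpExchange:
    t1: float  # Sync sent, read on the master clock
    t2: float  # Sync received, read on the slave clock
    t3: float  # Delay_Req sent, read on the slave clock
    t4: float  # Delay_Req received, read on the master clock

def offset_and_delay(x: PtpExchange) -> tuple[float, float]:
    """Return (slave clock offset from master, mean one-way path delay), assuming a symmetric path."""
    master_to_slave = x.t2 - x.t1
    slave_to_master = x.t4 - x.t3
    offset = (master_to_slave - slave_to_master) / 2
    delay = (master_to_slave + slave_to_master) / 2
    return offset, delay

# Example in microseconds: slave clock 250µs ahead of the master, 40µs path delay each way.
exchange = PtpExchange(t1=1000.0, t2=1290.0, t3=1500.0, t4=1290.0)
offset, delay = offset_and_delay(exchange)
print(f"offset {offset}µs, path delay {delay}µs")
```

The slave then subtracts the estimated offset from its own clock. Any asymmetry between the two directions goes straight into the offset estimate as half the difference of the two one-way delays.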

Does it really work well?

The first question should rather be: "How to test it?"

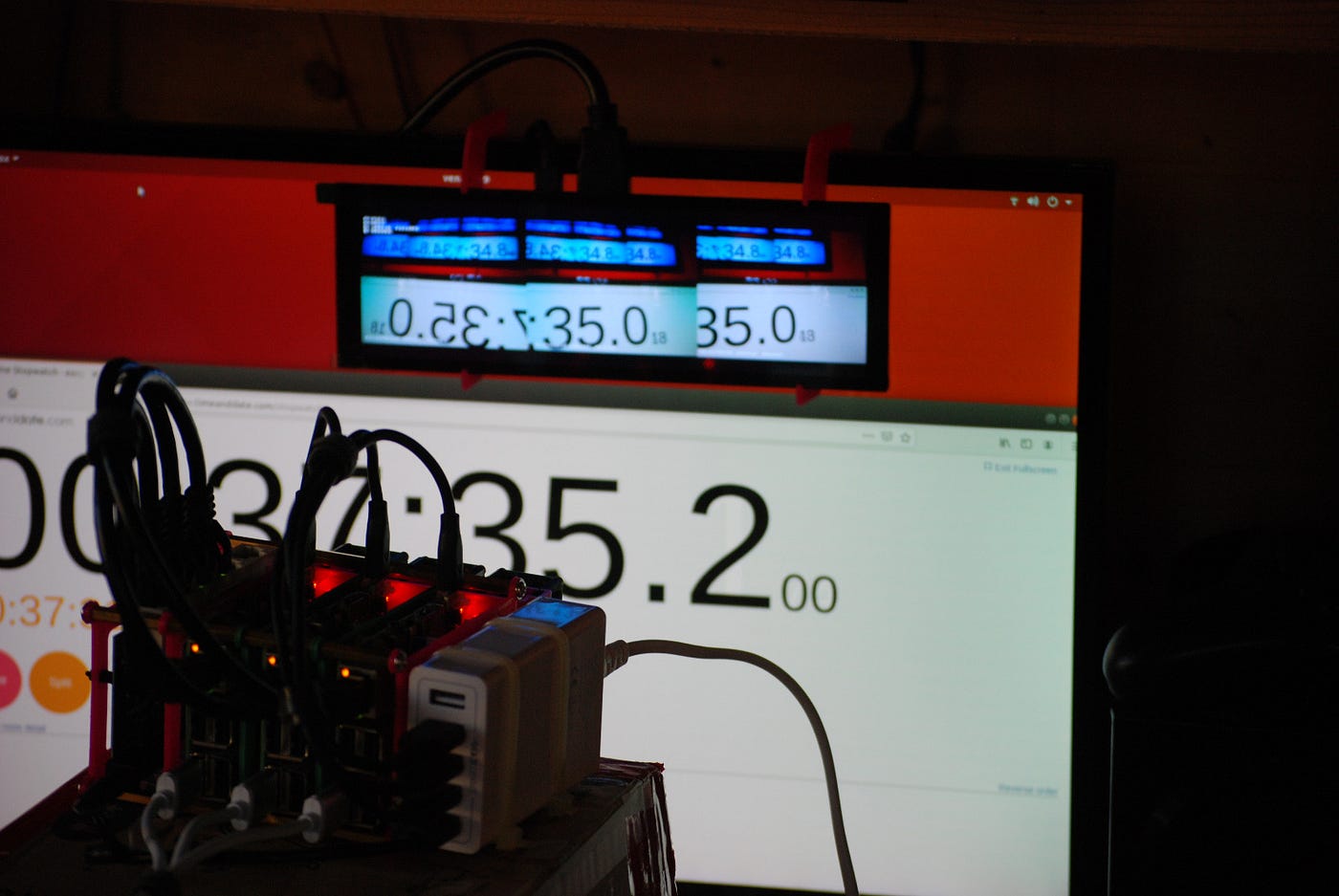

One idea is to display a stitched, synchronized stream of a common running stopwatch recorded by all cameras. If the different cameras do not capture the same stopwatch time, they are not capturing in sync.

Note: sorry for the mirrored image on the left; it's a configuration error.

The image was captured at 17 seconds and 86 milliseconds. All 3 cameras show the same time, but keep in mind some limitations of this experiment:

- The computer is rendering the stopwatch at 60fps, so the video output only refreshes every ~16ms. In other words, it cannot update every millisecond.

- The screen pixels themselves need a few milliseconds to change from black to white.
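To see why a 60fps stopwatch hides small offsets, this sketch models the on-screen reading as the stopwatch time at the most recent screen refresh, ignoring pixel response time. The capture times and the 1ms offset are hypothetical values.

```python
import math

REFRESH_HZ = 60
REFRESH_MS = 1000 / REFRESH_HZ  # about 16.7ms between screen updates

def displayed_reading_ms(true_time_ms: float) -> float:
    """Stopwatch value visible on screen: the time at the most recent refresh."""
    return math.floor(true_time_ms / REFRESH_MS) * REFRESH_MS

# Two captures 1ms apart with no refresh in between show the same reading...
print(displayed_reading_ms(17_086.0) == displayed_reading_ms(17_087.0))
# ...while two captures 1ms apart that straddle a refresh show different readings.
print(displayed_reading_ms(17_099.5) == displayed_reading_ms(17_100.5))
```

Under this model, matching readings only show that the cameras are within one refresh period (about 16.7ms) of each other; finer offsets need a different measurement, such as the stitcher's own timestamps.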

According to the stitcher statistics (white numbers at the bottom), the maximum delay between cameras barely exceeds 1ms during the video.
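Here is a sketch of how such a "maximum delay between cameras" statistic could be computed, assuming the stitcher receives one capture timestamp per camera for each stitched frame. The function names and sample timestamps are hypothetical, not taken from the actual stitcher.

```python
def frame_spread_us(timestamps_us: list[int]) -> int:
    """Delay between the earliest and the latest camera for one stitched frame."""
    return max(timestamps_us) - min(timestamps_us)

def max_delay_us(frames: list[list[int]]) -> int:
    """Worst per-frame spread over a whole recording."""
    return max(frame_spread_us(ts) for ts in frames)

# Hypothetical per-frame capture timestamps (µs) from 3 cameras.
frames = [
    [1_000_000, 1_000_420, 1_000_180],
    [1_033_333, 1_034_350, 1_033_900],
    [1_066_667, 1_066_700, 1_067_010],
]
print(f"max delay: {max_delay_us(frames)}µs")
```

Using the spread between the earliest and latest camera, rather than the offset from one reference camera, gives a single number per frame that does not depend on which camera is chosen as the reference.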

What is the output latency?

Latency is the time from an image being captured at the camera to the stitched/synchronized output appearing on the screen.

The small HDMI display attached over the larger display shows the live stitcher output (i.e., the synchronized output of the 3 cameras).

Latency is easy to find in this screenshot: it is the difference between the two stopwatch readings, 200ms - 13ms = 187ms.

Conclusion

Synchronized video streams with Raspberry Pi could be used for many use-cases (e.g., stitching, 3D sensing, tracking, …) before investing in expensive video multiplexer hardware.

Find the open-source modification of the RPi camera tool at:

https://github.com/inastitch/raspivid-inatech

Going further

How to reduce delay?

For the experiment presented here, the cameras were recording at 30fps.

When capturing at a higher framerate, synchronization improves (at the cost of lower video quality).

Raspberry Pi camera v2 can capture at up to 200fps, but the weak video encoder of the Raspberry Pi GPU cannot keep up with this pace. At 100fps, the quality is still adequate and the synchronization delay is around 100µs.
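One way to see why framerate matters: assuming the stitcher pairs each frame with the closest-in-time frame from every other camera, two cameras at the same framerate can never be paired more than half a frame period apart, and that bound shrinks as the framerate rises. This is an illustrative bound under that assumption, not the measured delays quoted above.

```python
def worst_case_pairing_error_ms(fps: float) -> float:
    """Largest gap between a frame and the nearest frame of another camera running
    at the same framerate with an arbitrary phase offset: half the frame period."""
    return 1000.0 / fps / 2

for fps in (30, 90, 100, 200):
    period = 1000.0 / fps
    print(f"{fps:>3}fps: period {period:5.1f}ms, "
          f"worst-case pairing error {worst_case_pairing_error_ms(fps):4.1f}ms")
```

The same scaling applies to any residual error that is a fraction of the frame period: halving the period halves it.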

Since delay also depends on network clock synchronization, better PTP settings and hardware support for timestamping in the Ethernet interface would help.

How to reduce latency?

Latency depends on each step of the stitching pipeline:

- [camera board] capture frame

- [camera board] encode frame

- [Ethernet] network delivery

- [stitcher board] frame alignment

- [stitcher board] frame decoding

- [stitcher board] stitched frame rendering

- [display] display
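End-to-end latency is the sum of these stages. A minimal sketch for keeping a per-stage budget from timestamps marked at the end of each stage; the stage names follow the list above, while the function and the timestamps are made up to show the bookkeeping and are not measurements from this setup. Comparing marks taken on different boards only makes sense because the boards share a synchronized clock.

```python
STAGES = ["capture", "encode", "network", "alignment", "decode", "render", "display"]

def stage_durations_ms(marks_ms: list[float]) -> dict[str, float]:
    """marks_ms[0] is when exposure started; marks_ms[i + 1] is when STAGES[i] finished."""
    if len(marks_ms) != len(STAGES) + 1:
        raise ValueError("need one start mark plus one mark per stage")
    return {stage: marks_ms[i + 1] - marks_ms[i] for i, stage in enumerate(STAGES)}

# Made-up timestamps (ms) for one frame, only to illustrate the calculation.
marks = [0.0, 10.0, 30.0, 45.0, 80.0, 95.0, 110.0, 125.0]
durations = stage_durations_ms(marks)
total = sum(durations.values())
slowest = max(durations, key=durations.get)

for stage, ms in durations.items():
    print(f"{stage:>9}: {ms:5.1f}ms")
print(f"total: {total}ms, slowest stage: {slowest}")
```

A budget like this shows which of the improvements listed below would pay off most for a given setup.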

In this experiment, many things could be done to improve latency:

- Using gigabit Ethernet, a frame would be delivered faster.

- Using AVB Ethernet, a frame could be delivered just in time for rendering: no waiting in a buffer for the next render loop and no frame alignment needed.

- Using hardware decoding and hardware copy, a frame would be available in GPU memory without any CPU copy.

- Using a high-framerate display (e.g., 144Hz and more), rendered output would be pushed to the next screen refresh with less delay.
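The last two points both come down to waiting for the next tick. Assuming a frame arriving at an arbitrary moment must wait for the next refresh of a display running at a fixed rate, the wait is at most one refresh period and about half of it on average. The helper below is hypothetical.

```python
import math

def wait_for_next_refresh_ms(arrival_ms: float, refresh_hz: float) -> float:
    """Time a frame arriving at arrival_ms waits until the next display refresh tick."""
    period = 1000.0 / refresh_hz
    next_tick = math.ceil(arrival_ms / period) * period
    return next_tick - arrival_ms

for hz in (60, 144):
    period = 1000.0 / hz
    print(f"{hz}Hz: worst-case wait {period:.1f}ms, average about {period / 2:.1f}ms")

# A frame arriving 5ms after a 60Hz refresh waits for the following one.
print(f"{wait_for_next_refresh_ms(5.0, 60):.2f}ms")
```

Delivering frames just before each tick, as the AVB point suggests, would push this wait toward zero regardless of the display rate.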

Source: https://medium.com/inatech/synchronize-multiple-cameras-to-capture-at-the-same-time-c285b520bd87

Posted by: beckpasm1937.blogspot.com
